Basics of Naive Bayes Algorithm in Data Science - Definition, Advantages, Disadvantages, Applications, Basic Implementation

WHAT IS NAIVE BAYES?

2. Assumes that the presence of a particular feature in a class is unrelated to the presence of any other feature.

3. Based on Bayes' theorem of conditional probability.

For example, consider having a Coca-Cola after eating popcorn.

Let's say P(pop) = probability of eating popcorn.

P(coco) = probability of drinking Coca-Cola.

P(coco|pop) = probability of having a Coca-Cola given that popcorn has been eaten.
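Bayes' theorem relates these quantities as P(coco|pop) = P(pop|coco) × P(coco) / P(pop). The sketch below works through that arithmetic. All the numbers, and the extra quantity P(pop|coco), are hypothetical values chosen only for illustration.

```python
# Hypothetical probabilities, invented for this example only.
p_pop = 0.4             # P(pop): probability of eating popcorn
p_coco = 0.5            # P(coco): probability of drinking Coca-Cola
p_pop_given_coco = 0.6  # P(pop|coco): popcorn eaten, given Coca-Cola was drunk

# Bayes' theorem: P(coco|pop) = P(pop|coco) * P(coco) / P(pop)
p_coco_given_pop = p_pop_given_coco * p_coco / p_pop

print(round(p_coco_given_pop, 2))  # 0.75
```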

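WHY IS IT CALLED NAIVE?

It is called naive because it assumes that every feature is independent of the others. Real data rarely satisfies this assumption, so the output may or may not turn out to be correct.

(You will understand that in the basic implementation section.)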
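Treating features as independent means the score of a class is just its prior multiplied by one likelihood per feature. Here is a minimal sketch of that calculation. The class names, feature names and probabilities are all hypothetical.

```python
import math

# Hypothetical priors P(class) and per-feature likelihoods P(feature | class).
priors = {"spam": 0.4, "ham": 0.6}
likelihoods = {
    "spam": {"contains_free": 0.7, "contains_meeting": 0.1},
    "ham": {"contains_free": 0.2, "contains_meeting": 0.5},
}
observed_features = ["contains_free", "contains_meeting"]

# Naive assumption: multiply the per-feature likelihoods as if independent.
scores = {
    label: priors[label]
    * math.prod(likelihoods[label][feature] for feature in observed_features)
    for label in priors
}

# Normalise the scores so they sum to 1 and can be read as posteriors.
total = sum(scores.values())
posteriors = {label: score / total for label, score in scores.items()}

predicted = max(posteriors, key=posteriors.get)
print(posteriors, predicted)
```

If the two features are in fact correlated, the product counts the same evidence twice, which is why the result can be wrong.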

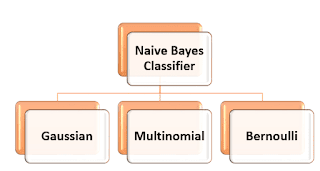

1. Gaussian Naive Bayes

It is used for continuous values.

It assumes that the features follow a normal distribution.

2. Multinomial Naive Bayes

It is used for discrete counts.

3. Bernoulli Naive Bayes

This classifier is used for binary vectors.
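The three variants are available in scikit-learn as GaussianNB, MultinomialNB and BernoulliNB. The sketch below fits each one on the kind of data it is meant for. The tiny arrays are hypothetical and only show the expected input shape of each classifier.

```python
import numpy as np
from sklearn.naive_bayes import BernoulliNB, GaussianNB, MultinomialNB

# Hypothetical labels shared by all three toy data sets.
labels = np.array([0, 0, 1, 1])

# Continuous measurements -> Gaussian Naive Bayes
continuous = np.array([[1.2, 3.4], [0.9, 3.1], [5.6, 0.7], [6.1, 1.0]])
gaussian_pred = GaussianNB().fit(continuous, labels).predict([[1.0, 3.0]])

# Discrete counts (e.g. word counts) -> Multinomial Naive Bayes
counts = np.array([[3, 0, 1], [2, 0, 0], [0, 4, 1], [0, 3, 2]])
multinomial_pred = MultinomialNB().fit(counts, labels).predict([[2, 0, 1]])

# Binary vectors (feature present or absent) -> Bernoulli Naive Bayes
flags = np.array([[1, 0, 1], [1, 0, 0], [0, 1, 1], [0, 1, 0]])
bernoulli_pred = BernoulliNB().fit(flags, labels).predict([[1, 0, 0]])

print(gaussian_pred, multinomial_pred, bernoulli_pred)
```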

ADVANTAGES

1. Fast.

2. Highly scalable.

3. Used for binary and multi-class classification.

4. Great choice for text classification.

5. Can be trained easily on small data sets.

DISADVANTAGES

Naive Bayes assumes that the features are independent of each other. However, in the real world, features often depend on each other, which can make its probability estimates unreliable.
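One way to see the effect of dependent features is to feed the model the same feature twice. A duplicated column is perfectly correlated with the original, yet Naive Bayes counts it as fresh evidence. The data below is hypothetical and the sketch is only an illustration of that effect.

```python
import numpy as np
from sklearn.naive_bayes import GaussianNB

# Hypothetical one-feature data set with balanced classes.
X = np.array([[1.0], [2.0], [3.0], [4.0], [5.0], [6.0]])
y = np.array([0, 0, 0, 1, 1, 1])

# The same feature copied into a second column (fully dependent features).
X_dup = np.hstack([X, X])

query = np.array([[3.2]])
query_dup = np.hstack([query, query])

p_single = GaussianNB().fit(X, y).predict_proba(query)[0]
p_dup = GaussianNB().fit(X_dup, y).predict_proba(query_dup)[0]

# The duplicated column adds no information, but the model becomes more
# confident because it treats the copy as independent evidence.
print("one copy:  ", p_single)
print("two copies:", p_dup)
```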

APPLICATIONS

1. Text classification.

2. Spam filtering.

3. Sentiment analysis.

4. Real-time prediction.

5. Multi-class prediction.

6. Recommendation systems.
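As a rough illustration of the text classification and spam filtering use cases, the sketch below combines scikit-learn's CountVectorizer with MultinomialNB. The messages and labels are hypothetical toy data, far too small for a real filter.

```python
from sklearn.feature_extraction.text import CountVectorizer
from sklearn.naive_bayes import MultinomialNB
from sklearn.pipeline import make_pipeline

# Hypothetical toy messages, invented for illustration only.
messages = [
    "win money now",
    "free prize money",
    "meeting at noon",
    "lunch with team",
]
labels = ["spam", "spam", "ham", "ham"]

# CountVectorizer turns each message into word counts;
# MultinomialNB then models those counts per class.
spam_filter = make_pipeline(CountVectorizer(), MultinomialNB())
spam_filter.fit(messages, labels)

prediction = spam_filter.predict(["free money"])
print(prediction)
```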

BASIC IMPLEMENTATION OF GAUSSIAN NAIVE BAYES

scikit-learn (imported as sklearn) is the package required to implement Naive Bayes.

It depends on NumPy and SciPy, so both packages should be installed on the system first.

Program

# Import the Gaussian Naive Bayes model
from sklearn.naive_bayes import GaussianNB
import numpy as np

# Assign predictor and target variables.
# In this example, we give various inputs to train the model.
# Each row holds three marks for one person. A person with any mark of
# 10 or below is labelled fail ('f'); otherwise the label is pass ('p').
marks = np.array([[15, 20, 10], [17, 15, 18], [7, 0, 10], [12, 10, 20],
                  [22, 10, 4], [14, 20, 12], [11, 11, 11], [17, 16, 15],
                  [12, 20, 4], [12, 17, 23], [12, 6, 7], [12, 16, 12]])
result = np.array(['f', 'p', 'f', 'f', 'f', 'p', 'p', 'p', 'f', 'p', 'f', 'p'])

# Create a Gaussian classifier
model = GaussianNB()

# Train the model using the training set
model.fit(marks, result)

# Predict output
prediction = model.predict([[17, 16, 7]])
print('if the input is ([[17,16,7]])')
print(prediction)

prediction = model.predict([[1, 6, 7]])
print('if the input is ([[1,6,7]])')
print(prediction)

# NOTE: Even though all the marks below are greater than 10, the model
# still predicts fail. The reason is that we have not given enough
# inputs to train the model completely.
prediction = model.predict([[20, 15, 13]])
print('if the input is ([[20,15,13]])')
print(prediction)

# However, if you use inputs that were already part of the training
# data, the model predicts pass.
prediction = model.predict([[17, 15, 18]])
print('if the input is ([[17,15,18]])')
print(prediction)

prediction = model.predict([[12, 16, 12]])
print('if the input is ([[12,16,12]])')
print(prediction)

Output

if the input is ([[17,16,7]])

['f']

if the input is ([[1,6,7]])

['f']

if the input is ([[20,15,13]])

['f']

if the input is ([[17,15,18]])

['p']

if the input is ([[12,16,12]])

['p']
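To see how confident the model is in each of these predictions, and not only which label it picks, GaussianNB also offers predict_proba. The sketch below retrains on the same marks and result arrays and prints the class probabilities for a few of the inputs used above. No expected values are shown because they depend on the fitted model.

```python
import numpy as np
from sklearn.naive_bayes import GaussianNB

# Same training data as in the program above.
marks = np.array([[15, 20, 10], [17, 15, 18], [7, 0, 10], [12, 10, 20],
                  [22, 10, 4], [14, 20, 12], [11, 11, 11], [17, 16, 15],
                  [12, 20, 4], [12, 17, 23], [12, 6, 7], [12, 16, 12]])
result = np.array(['f', 'p', 'f', 'f', 'f', 'p', 'p', 'p', 'f', 'p', 'f', 'p'])

model = GaussianNB().fit(marks, result)

# Print the probability of each class for some of the inputs tried earlier.
for sample in ([17, 16, 7], [20, 15, 13], [17, 15, 18]):
    probabilities = model.predict_proba([sample])[0]
    summary = dict(zip(model.classes_, probabilities.round(3)))
    print(sample, summary)
```

A probability close to 0.5 means the model is barely able to separate the two classes for that input, which is what you would expect from such a small training set.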
